The main purpose of this review is to provide a comparative study of existing contributions on implementing parallel image processing applications, together with their benefits and limitations. The main problem is that image processing is generally a time-consuming process. Parallel computing provides an efficient and convenient way to address this issue.

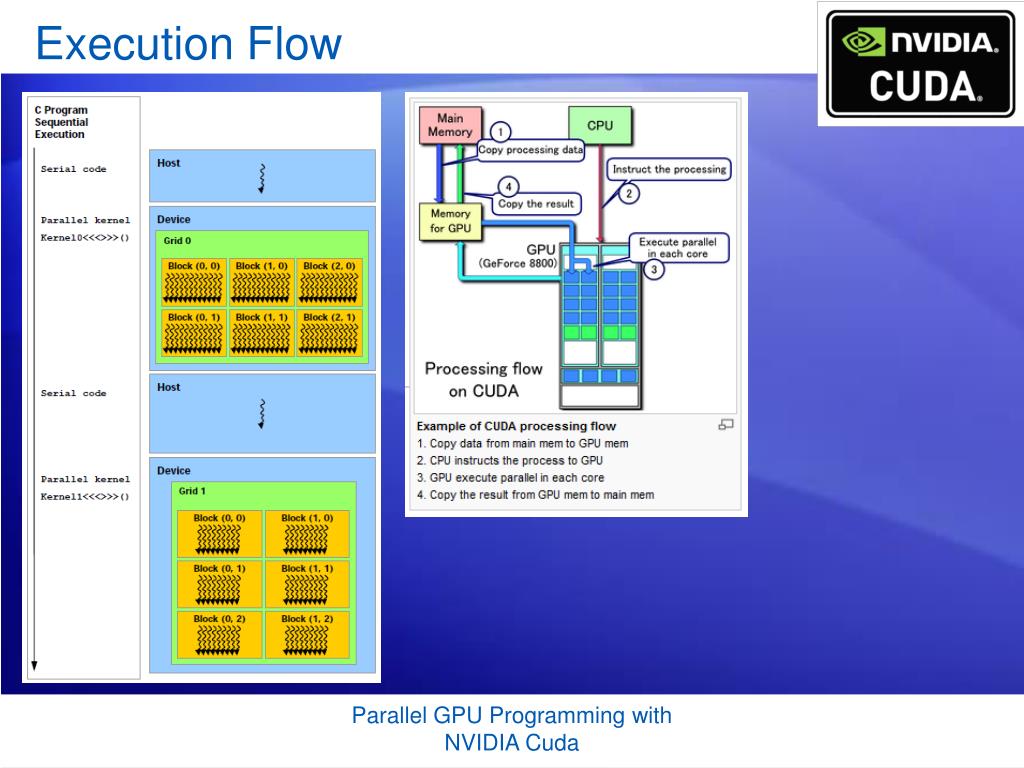

Digital image processing is used effectively in computer vision, medical imaging, meteorology, astronomy, remote sensing, and other related fields. Its aim is to improve image quality and subsequently to perform feature extraction and classification.

Using graphics processing units (GPUs) in parallel with the central processing unit (CPU) to accelerate algorithms and applications that demand extensive computational resources has been a trend over the last few years. In this paper, we propose a GPU-accelerated method to parallelize different computer vision tasks. We report on parallelism and acceleration in computer vision applications and give an overview of NVIDIA's CUDA GPU programming framework. We then look at GPU architecture and the acceleration used to optimize time-consuming computations. We introduce a high-speed computer vision algorithm that runs on the GPU using NVIDIA's Compute Unified Device Architecture (CUDA). We achieve significant accelerations for our computer vision algorithms and show that programming the GPU with CUDA can improve efficiency and speedups. In particular, we demonstrate the efficiency of our implementations through the speedups obtained, which for some tasks and image sizes reach 80 and 722 times.

Image classification is a widely discussed topic in this era. It covers a wide range of application domains, from garbage classification to advanced fields of medical science. Many past and ongoing research efforts aim at better-optimized image classification techniques. However, image classification turns out to be time-consuming. This work deals with the widely accepted FashionMNIST dataset (a Modified National Institute of Standards and Technology database), which has a set of sixty thousand images for training a model, and with the popular MNIST dataset of handwritten digits. The work compares several convolutional neural network (CNN) models and aims to parallelize them using a distributed framework provided by the Python library Ray. The parallelization has been achieved over multiple CPU cores and the many cores of a GPU. The work also shows that parallelization does not affect the overall accuracy of the system.

Right? But why such a big 2D grid? I would have 256*4096 = 1,048,576 threads with that grid.

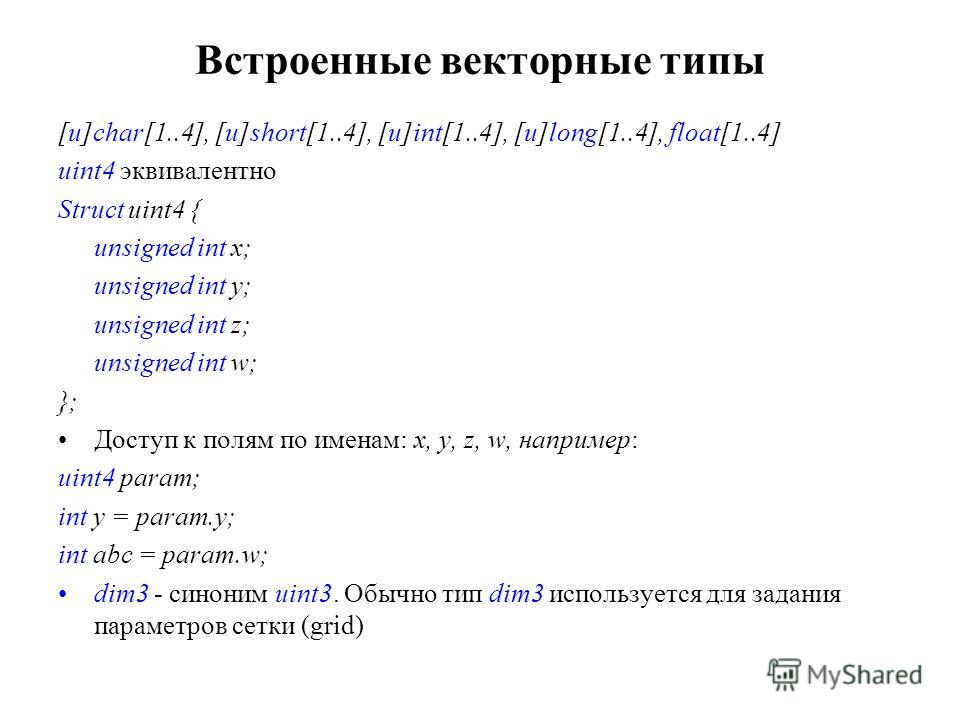

I am new to CUDA C GPGPU programming and found an example in the following pdf-file:

`__global__ void add_matrix( float* a, float *b, float *c, int N )`

`int i = blockIdx.x * blockDim.x + threadIdx.x;`

`int j = blockIdx.y * blockDim.y + threadIdx.y;`

`cudaMemcpy( c, cd, size, cudaMemcpyDeviceToHost );`

`cudaFree( ad ); cudaFree( bd ); cudaFree( cd );`

I do understand everything but not the given block and grid parameters.

`dim3 dimBlock( blocksize, blocksize );` means to me: 16*16 = 256 threads per block. Is that right?

`dim3 dimGrid( N/dimBlock.x, N/dimBlock.y );` means to me: N/dimBlock.x = 1024/16 = 64 and N/dimBlock.y = 64, so 64*64 = 4096 blocks per grid.
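The block and grid arithmetic in the question can be checked with a small complete program. The following is a minimal sketch, not the code from the PDF: the kernel body, the row-major indexing, the bounds check, the input values, and the helper `blocks_needed` are assumptions added for illustration. Only the kernel signature, the index computations, `dimBlock`, `dimGrid`, N = 1024, and a block size of 16 come from the fragments quoted above. The kernel uses one thread per matrix element, which is why a 1024 x 1024 matrix leads to 1,048,576 threads.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// One thread per matrix element: (i, j) is the thread's global 2D position.
__global__ void add_matrix(const float *a, const float *b, float *c, int N)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // column index
    int j = blockIdx.y * blockDim.y + threadIdx.y;  // row index
    if (i < N && j < N) {       // guard (assumption): matters when the grid is rounded up
        int index = j * N + i;  // row-major layout (assumption)
        c[index] = a[index] + b[index];
    }
}

// Hypothetical helper: blocks needed to cover n elements (ceiling division).
static unsigned int blocks_needed(unsigned int n, unsigned int block)
{
    return (n + block - 1) / block;
}

int main()
{
    const int N = 1024;        // matrix is N x N, as in the question
    const int blocksize = 16;  // 16 x 16 = 256 threads per block
    const size_t size = (size_t)N * N * sizeof(float);

    // Host buffers with arbitrary test values (assumption).
    float *a = (float *)malloc(size);
    float *b = (float *)malloc(size);
    float *c = (float *)malloc(size);
    if (!a || !b || !c) { fprintf(stderr, "host allocation failed\n"); return 1; }
    for (int k = 0; k < N * N; ++k) { a[k] = 1.0f; b[k] = 3.5f; }

    // Device buffers and host-to-device copies.
    float *ad, *bd, *cd;
    cudaMalloc((void **)&ad, size);
    cudaMalloc((void **)&bd, size);
    cudaMalloc((void **)&cd, size);
    cudaMemcpy(ad, a, size, cudaMemcpyHostToDevice);
    cudaMemcpy(bd, b, size, cudaMemcpyHostToDevice);

    dim3 dimBlock(blocksize, blocksize);
    dim3 dimGrid(blocks_needed(N, dimBlock.x), blocks_needed(N, dimBlock.y));

    add_matrix<<<dimGrid, dimBlock>>>(ad, bd, cd, N);
    cudaError_t err = cudaGetLastError();
    if (err != cudaSuccess) {
        fprintf(stderr, "kernel launch failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    // This copy waits for the kernel to finish before reading the result.
    cudaMemcpy(c, cd, size, cudaMemcpyDeviceToHost);

    unsigned long long total =
        (unsigned long long)dimGrid.x * dimGrid.y * dimBlock.x * dimBlock.y;
    printf("blocks per grid: %u, threads per block: %u, total threads: %llu\n",
           dimGrid.x * dimGrid.y, dimBlock.x * dimBlock.y, total);
    printf("c[0] = %.1f\n", c[0]);

    cudaFree(ad); cudaFree(bd); cudaFree(cd);
    free(a); free(b); free(c);
    return 0;
}
```

With N = 1024 and 16 x 16 blocks, the grid is 64 x 64 = 4096 blocks, and 4096 * 256 = 1,048,576 threads, which equals N * N. So the grid is not oversized; it is exactly large enough to give every element its own thread. Assuming the file is saved as `add_matrix.cu` (a hypothetical name), it can be built with `nvcc add_matrix.cu -o add_matrix`. Rounding the block count up and checking bounds in the kernel keeps the launch correct when N is not a multiple of the block size.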
